We are becoming progressively more reliant on technology in our day-to-day lives, so software testing is taking on an ever more important role in the development of software applications. If the software is unreliable, users end up with more problems than solutions.

At Smartrak, we know that our software is incorporated into time-sensitive applications and relied on as a tool to manage assets in high-risk environments. We take the responsibility to provide reliable, robustly tested software seriously: people's lives depend on it.

Setting Standards

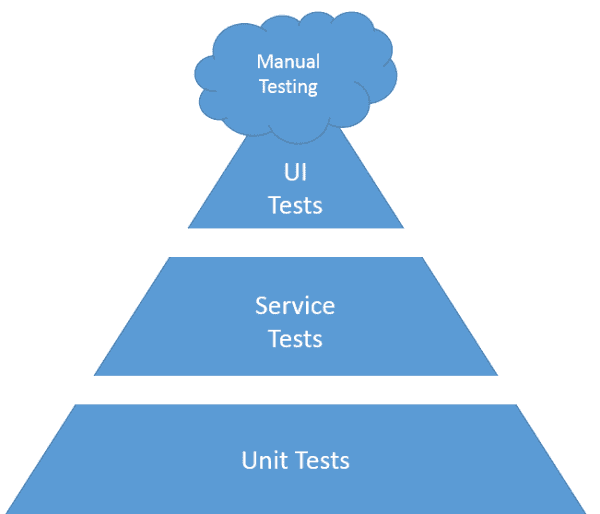

Smartrak uses the testing pyramid to design our test plans. The testing pyramid is widely accepted as the de facto standard for the ratio of the different types of software testing that should be performed on a software application or service.

As we travel up the pyramid, the number of tests at each layer decreases, while the cost in time and effort of designing, running and maintaining each test increases. This trade-off between the cost and the benefit of a test forces Smartrak's Engineering and Quality Assurance teams to be selective about what we test.

This article explores the testing pyramid in more detail, providing real-world scenarios that demonstrate the scope of the tests in each level, and explaining how Smartrak uses the testing pyramid when designing software solutions and implementing tests to ensure that those solutions satisfy the initial brief.

Unit tests

Unit tests are used to test individual blocks (units) of functionality. They provide peace of mind around the building blocks of a system, can be easily automated and are relatively inexpensive to run and maintain.

Because of these qualities, unit tests serve as the foundation of the testing pyramid, and typically number in the thousands, or tens of thousands, depending on the size of the software application.

An example of a real-world scenario that could be covered by a unit test is checking that your car door can be unlocked: you test that the door unlocks with your car key, but not with your house key, your garage door remote, or the key belonging to your neighbour (who happens to have the same car as you). Another scenario with an equivalent unit test is a discount being applied at a supermarket checkout for bringing your own bag: the discount should be applied if you bring a bag sold by the chain, but not if you bring a bag sold by a different supermarket.
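The bag-discount scenario above translates naturally into code. The following is a minimal sketch rather than Smartrak's actual code: the `bag_discount` function, the `FreshMart` chain name and the 10-cent discount are all hypothetical, chosen only to show how a unit test pins down one small piece of logic, including an invalid input.

```python
import unittest

# Hypothetical values, for illustration only.
OWN_BRAND = "FreshMart"
BAG_DISCOUNT_CENTS = 10


def bag_discount(bag_brand):
    """Return the checkout discount in cents for a bag of the given brand."""
    if bag_brand == OWN_BRAND:
        return BAG_DISCOUNT_CENTS
    return 0


class BagDiscountTest(unittest.TestCase):
    def test_own_brand_bag_gets_discount(self):
        self.assertEqual(bag_discount("FreshMart"), 10)

    def test_other_brand_bag_gets_no_discount(self):
        self.assertEqual(bag_discount("OtherMart"), 0)

    def test_missing_bag_gets_no_discount(self):
        # Edge case: the customer brought no bag at all.
        self.assertEqual(bag_discount(None), 0)
```

Saved in a file, tests like these can be run with `python -m unittest`. Each one exercises a single function in isolation, which is what keeps them cheap to run in large numbers.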

Smartrak uses unit tests to check the correctness of our business logic, ensure that edge cases and invalid inputs are handled appropriately, and to prevent functionality regression when making changes to our software.

Service tests

Service-level tests (which we call integration tests at Smartrak) test the interaction between different components. Because integration tests are more expensive to write, run and maintain than unit tests, Smartrak has to be more selective about which parts of the system we test. They also require more resources to run, so their numbers are lower, generally in the thousands.

A vehicle-based scenario could be: when throttle input is received, and the vehicle is in gear with the handbrake off, the vehicle moves. Another example of an integration test from all of our childhoods is the baking soda and vinegar volcano, where you would test that once the baking soda is combined with a vinegar solution, the outcome is a foamy mass.
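The vehicle scenario above can be sketched as an integration test. Every class and name below (`Gearbox`, `Handbrake`, `Vehicle`, `apply_throttle`) is a hypothetical stand-in. What matters is that the tests exercise several components working together rather than one function in isolation.

```python
from dataclasses import dataclass, field


@dataclass
class Gearbox:
    gear: str = "N"  # "N" is neutral; anything else counts as in gear

    def in_gear(self):
        return self.gear != "N"


@dataclass
class Handbrake:
    engaged: bool = True


@dataclass
class Vehicle:
    gearbox: Gearbox = field(default_factory=Gearbox)
    handbrake: Handbrake = field(default_factory=Handbrake)
    speed: float = 0.0

    def apply_throttle(self, amount):
        """Accelerate only when in gear and the handbrake is released."""
        if amount > 0 and self.gearbox.in_gear() and not self.handbrake.engaged:
            self.speed += amount


def test_moves_when_in_gear_with_handbrake_off():
    car = Vehicle(Gearbox("D"), Handbrake(engaged=False))
    car.apply_throttle(5.0)
    assert car.speed == 5.0


def test_stays_still_with_handbrake_on():
    car = Vehicle(Gearbox("D"), Handbrake(engaged=True))
    car.apply_throttle(5.0)
    assert car.speed == 0.0


def test_stays_still_in_neutral():
    car = Vehicle(Gearbox("N"), Handbrake(engaged=False))
    car.apply_throttle(5.0)
    assert car.speed == 0.0
```

In a real system the components would be services, databases or message queues rather than small in-memory classes, which is where the extra cost of integration tests comes from.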

At Smartrak, we use integration tests to check that:

When an issue is encountered in our service layer, a 'failing test' is created to reproduce the scenario. Once the fix has been applied, we invert the expected outcome of the test to ensure that if the issue occurs again, we will be notified through an automated process.
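One way to read this workflow is sketched below. The bug is entirely hypothetical (an `average_speed_kmh` helper that used to divide by zero for a zero-duration trip), and a single function stands in for a service to keep the example short. The comments show how the expectation changes once the fix is in place.

```python
import unittest


def average_speed_kmh(distance_km, hours):
    """Return average speed, treating a zero-duration trip as stationary."""
    # The fix: the hypothetical original divided unconditionally and
    # raised ZeroDivisionError when hours was 0.
    if hours == 0:
        return 0.0
    return distance_km / hours


class ZeroDurationTripRegressionTest(unittest.TestCase):
    def test_zero_duration_trip(self):
        # Step 1 (before the fix): the test reproduced the issue, e.g.
        #     with self.assertRaises(ZeroDivisionError):
        #         average_speed_kmh(0.0, 0)
        # Step 2 (after the fix): the expectation is inverted, so the
        # test now fails if the old behaviour ever comes back.
        self.assertEqual(average_speed_kmh(0.0, 0), 0.0)

    def test_normal_trip_is_unaffected(self):
        self.assertEqual(average_speed_kmh(100.0, 2), 50.0)
```

Keeping the test in the suite after the fix is what turns a one-off reproduction into an ongoing, automated check.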

User interface tests

User interface (UI) tests check that the correct interface is displayed and that the user can successfully interact with the code on the server. UI tests focus on the visual outcome of an action: that the user receives appropriate feedback when an action is performed. They are very time-consuming and expensive to run, so we use UI testing more selectively.

At Smartrak, all users need to be able to log in before they can realise the value of our system, so we have user interface tests covering the different login scenarios for our various systems. We also created personas based on the different user levels to determine which functionality is critical for a user of a particular level to be able to do their job. For a map user, it is having events come through in a timely manner after they are created; a client administrator needs to be able to manage users and user permissions. These are the areas that are tested. A real-world example would be a driver pressing the hazard lights button in their car and observing both that a) the hazard lights begin to flash, and b) the indicator lights on the instrument cluster are illuminated.

Manual testing

Finally, manual testing is done during the development phase by the team writing the software. Once the development is deemed complete, the software is handed off to a separate Quality Assurance team that aims to weed out any bugs or user experience issues, and to ensure that the brief is met, before the software is shipped.

The manual testing process is completed in the following scenarios: a major refactoring (reworking the code to improve it while maintaining the same behaviour) of a part of the system, a professional services piece of work, or a change that concerns critical functionality (satellite communications, emergency alerts, etc.).

Although manual testing is extremely time-consuming and expensive to conduct, at Smartrak we believe that there is real value in uncovering user experience or workflow issues during this phase. The Quality Assurance team applies a different mindset to the software when they are testing it. They are overly critical, whereas the original engineers see their 'baby' as perfect and may afford the software a subconscious bias when performing the initial testing.

Bringing the testing methodology together

Unit tests are run automatically on the software engineers' machines during the development process. By using intelligent tools, only the tests affected by the changes made to the relevant software module are run, rather than the whole test suite. In addition, whenever engineers submit new code, be it features or bug fixes, to our build servers, all of the unit and integration tests are run. To avoid resource contention when running integration tests, we only run these twice a day.
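The idea of running only the affected tests can be illustrated with a small sketch. It is not a description of the tools Smartrak uses. The test names, module names and the hand-written dependency map are all hypothetical, and real tools typically derive this mapping automatically from the code.

```python
# Hypothetical map from each test to the modules it exercises.
TEST_DEPENDENCIES = {
    "test_login": {"auth", "users"},
    "test_map_events": {"events", "map"},
    "test_user_permissions": {"users", "permissions"},
}


def affected_tests(changed_modules, dependencies=TEST_DEPENDENCIES):
    """Return, in sorted order, the tests that touch any changed module."""
    changed = set(changed_modules)
    return sorted(
        name for name, modules in dependencies.items() if modules & changed
    )


# A change to the "users" module selects two of the three tests.
print(affected_tests(["users"]))
```

The full suite remains the safety net on the build servers, while a selection step like this keeps the feedback loop on an engineer's machine short.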

For complex changes or large features, the Quality Assurance team will complete manual testing inside a test environment running against a snapshot of live data before the changes are pushed into the production environment.